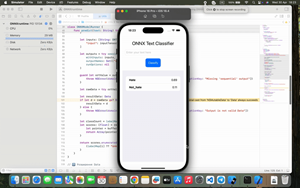

Inoxoft Launches Offline AI Tool for On-Device Text Classification

PHILADELPHIA, July 30, 2025 (GLOBE NEWSWIRE) -- Inoxoft, a custom software development company, has announced the release of WhiteLightning, an open-source CLI tool that enables developers to train and run fast, lightweight text classifiers entirely offline. The tool does not rely on cloud APIs or LLMs at runtime.

Built over a year by Inoxoft’s AI and ML engineering team, WhiteLightning was created to address a growing need: delivering intelligent, privacy-safe NLP to edge devices, embedded systems, and offline environments. Using a novel teacher-student approach, the tool distills LLM-generated synthetic data into compact ONNX models under 1 MB in size, ready to run anywhere.

“We built WhiteLightning to give developers full control over NLP and without the usual trade-offs,” said Liubomyr Pohreliuk, CEO at Inoxoft. “You don’t need a 175B model on standby. You need something that works offline, fast, and reliably, something you can ship.”

Why it matters

- Drastically lower cost. The tool uses LLMs just once for training (around one cent per task), avoiding ongoing per-query API fees.

- Compact model size. It’s small enough to fit inside mobile apps, routers, or embedded devices.

- Runs on minimal hardware. WhiteLightning is made for edge environments like Raspberry Pi or older phones.

- Fast and efficient. The solution processes thousands of inputs per second on standard CPUs.

- Cross-platform ready. Models produce consistent output across Python, Rust, Swift, and more.

- Truly offline. There are no cloud dependencies, data leaks, or vendor lock-in.

Under the hood

- LLM-to-edge distillation. Converts task prompts into synthetic data, then a fast ONNX model.

- CLI-first experience. Simple Docker-based tool, one command to generate classifiers.

- Multi-language runtime compatibility. Supports Rust, Swift, Node.js, Dart, and more.

- GitHub-native DevOps. CI/CD with Flake8, Pytest, pre-commit hooks, test matrix in GitHub Actions.

- Secure by design. No local Python dependencies, environment-variable-based API handling.
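To make the teacher-student idea concrete, here is a minimal, illustrative sketch in plain Python. It is not WhiteLightning's code or API: `teacher_generate` is a hypothetical stand-in for the LLM step (a real pipeline would call an LLM once to produce synthetic examples), the student is a tiny bag-of-words logistic regression chosen for brevity, and the ONNX export step is omitted entirely. All function names and parameters are assumptions made for illustration.

```python
import math
from collections import Counter


def teacher_generate(n_per_class):
    """Hypothetical stand-in for the LLM 'teacher': returns labeled synthetic text.

    A real pipeline would prompt an LLM once at training time; here the
    examples are built from fixed word lists so the sketch runs offline.
    """
    positive = ["great", "love", "excellent", "happy", "fast"]
    negative = ["terrible", "hate", "broken", "slow", "awful"]
    nouns = ["app", "service", "update", "device", "support"]
    data = []
    for i in range(n_per_class):
        noun = nouns[i % len(nouns)]
        data.append((f"the {noun} is {positive[i % len(positive)]}", 1))
        data.append((f"the {noun} is {negative[i % len(negative)]}", 0))
    return data


def tokenize(text):
    return text.lower().split()


def train_student(data, epochs=100, lr=0.1):
    """Fit a small bag-of-words logistic regression (the 'student') with SGD."""
    weights = Counter()  # missing tokens read as weight 0
    bias = 0.0
    for _ in range(epochs):
        for text, label in data:
            tokens = tokenize(text)
            z = bias + sum(weights[t] for t in tokens)
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            grad = p - label                # gradient of log loss w.r.t. z
            bias -= lr * grad
            for t in tokens:
                weights[t] -= lr * grad
    return dict(weights), bias


def predict(model, text):
    """Classify text with the trained student; no teacher or network needed."""
    weights, bias = model
    z = bias + sum(weights.get(t, 0.0) for t in tokenize(text))
    return 1 if z > 0 else 0


if __name__ == "__main__":
    synthetic = teacher_generate(10)   # one-time "teacher" step
    student = train_student(synthetic) # compact model used at runtime
    print(predict(student, "the router is excellent"))
    print(predict(student, "the router is awful"))
```

The point of the sketch is the division of labor: the expensive teacher is used only to create training data, and the small student is the only component needed at inference time.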

WhiteLightning is not a hosted SaaS. It’s a production-grade CLI utility aimed at engineers who want precise, controllable, local-first AI capabilities without extra infrastructure.

Built and backed by Inoxoft

WhiteLightning is developed and actively maintained by Inoxoft’s ML engineers and OSS team:

- Open-source license. GPL-3.0 for the tool, MIT for generated models.

- Community-led roadmap. Feature discussions and dev chat on Discord.

- Deployment-ready Docker image. ghcr.io/inoxoft/whitelightning

- Public CI/CD. All PRs tested through GitHub Actions, cross-runtime validations included.

Try it yourself

WhiteLightning is available on GitHub with full documentation, test examples, and deployment templates.

For developers who need real-world NLP—fast, free, and fully offline—WhiteLightning offers a clean, powerful alternative to hosted LLMs.

Contact:

pr@inoxoft.com

A photo accompanying this announcement is available at https://www.globenewswire.com/NewsRoom/AttachmentNg/31f97979-3089-48c3-b2ab-7a1217a7dfa0
